This is part 5 of our 8 SEO Gifts for Chanukah series breaking down some basics of Search Engine Optimization.

Introducing Your Best Search Engine Friend

Crawling the internet is like swimming through every drop of the Pacific Ocean. Imagine starting in Sydney, heading up to Seattle, going back across to Tokyo before ending at Viña del Mar. This would be a massive enough ordeal to do once, now imagine doing that billions of times a day in an ever growing ocean.

This is the life of a Searchbot. It crawls, caches and indexes the internet at a frightening speed so you can find that New York Times article moments after it’s published. This is why people love search engines so much and why the most popular one, Googlebot, averages 5,134,000,000 searches a day.

How does Searchbot Work?

The Searchbot is the software used by the most popular search engines to search and crawl the web. To some it looks like this, but to others it’s more like this. However you view it, the searchbot creates a searchable index that is constructed for the search engine.

Directing Searchbot

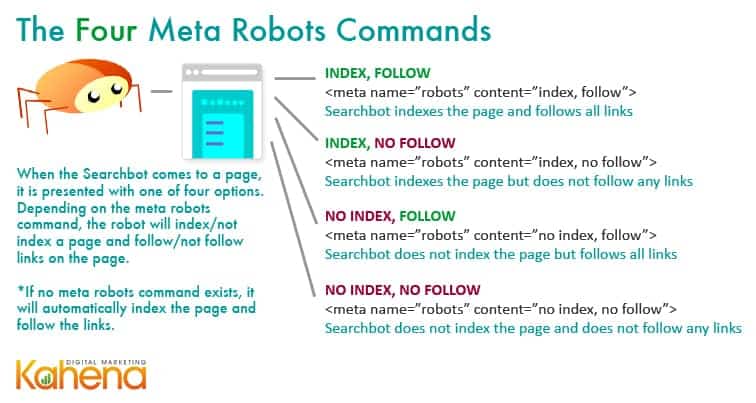

The neat thing about the Searchbot is your ability to control it’s movements throughout your site, as well as the pages it follows and indexes. As Searchbot crawls your site, if unhindered by code, it will naturally follow, or pass Page Rank to, each link it comes across on a page. It will also index, or read and make viewable on search engine results, all linked to pages on your site.

Sometimes, though, you don’t want Searchbot to follow or index a page. An example of this would be a login page to the backend of your website. The last thing you’d want is for your customers to search for your page and find access to the backend of your site on a search engine! Also, maybe you want search engines to follow all the links on a page, but not index that page, due to the fact that it lacks content but contains good links. For this you would ask the bot to follow but not index the page using the meta robots command.

Controlling Searchbot’s Activity With Robots.txt

There are also time you want an entire directory blocked from the Searchbot. Robots.txt is a file placed in the root of the domain of your site and controls the Searchbots actions throughout the site. The biggest different between Robots.txt and the Meta Robots Commands is that Robots.txt will prevent the Searchbot from entering an entire section, or the entirety of your site, whereas Meta Robots Commands only apply to the page which the code is placed on.

Here Are the Four Meta Robot Commands for Each Page On Your Site:

How to Assist Searchbot’s Indexation of Your Site

The best way to streamline the Searchbot’s indexation of your site is to post a site map. This way you can be guaranteed the Searchbot will crawl all the pages you want to be viewable in search results. In general, if you have a good linking structure set up within your site, you shouldn’t be too worried about Searchbot missing a link. However, a site map is a good idea if:

- You have dynamic, non-SEO friendly content (like flash or javascript)

- Your site is new and does not have many external links linking to it

- You are unsure of how well the internal site structure is set up

- You are an e-commerce site and want your products to be displayed in search engine results.

Awesome Tools to Crawl Your Site and see what Searchbot sees

- Screaming Frog and Feed the Bot can crawl your site just like Google-bot

- Fetch as Google allows you to crawl up to 500 URLs a week and is a great troubleshooting tool.

- Submit URL – only for new sites

- Webmaster Tools – A number of metrics here to measure how your site is performing vis a vis the crawl

- Submit Sitemap – this can help or hinder so look into it before you do so.

| Googlebot has a sense of humor, here are some who’ve indulged it: |

| http://store.nike.com/robots.txt |

| http://explicitly.me/robots.txt |

| http://yelp.com/robots.txt |

| http://www.tripadvisor.com/robots.txt |

| http://catmoji.com/robots.txt |